Dear Yaroslav,

Thank you for the helpful information. It is much appreciated.

Below is the information regarding StarWind vSAN as a Linux-based CVM vs. a Windows executable in Microsoft Hyper-V:

There are two ways to deploy StarWind Virtual SAN for Microsoft Hyper-V:

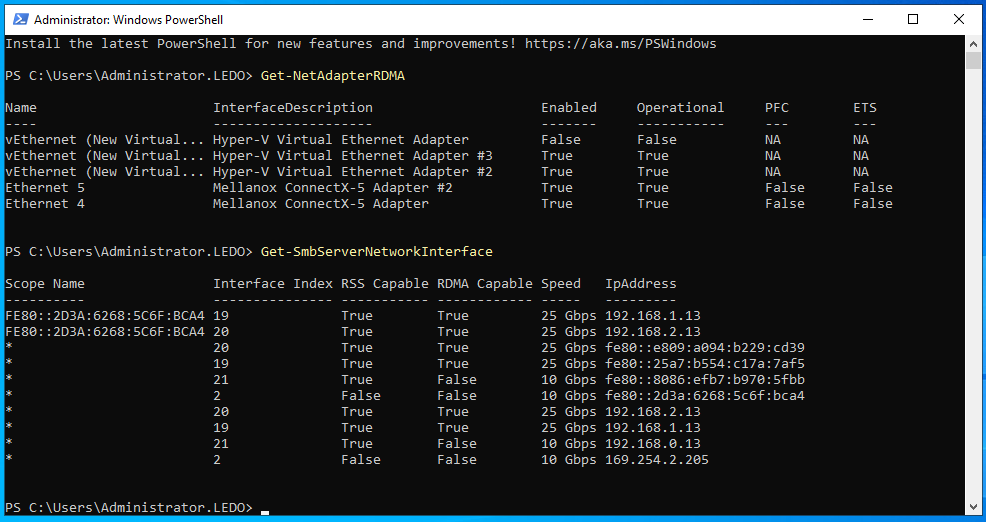

These are a Linux-based Controller Virtual Machine (CVM) and a Windows executable. The CVM approach is actually preferred because it has advanced features such as ZFS, NVMe-oF support, web UI management, AI-powered telemetry and so on, none of which are available in the Windows version. The CVM also performs better because Linux has faster storage and network stacks, and our proprietary version of it uses polling to avoid interrupt handling and context switches, which reduces CPU usage and lowers I/O latency. With PCI pass-through, the Controller Virtual Machine is granted direct access to the physical devices, so there is no virtualization overhead. The Windows version, while fully supported in production, is a bit easier to install and evaluate. We encourage you to use the Linux-based CVM for both evaluation and production, unless the Windows version is absolutely necessary in your particular case!

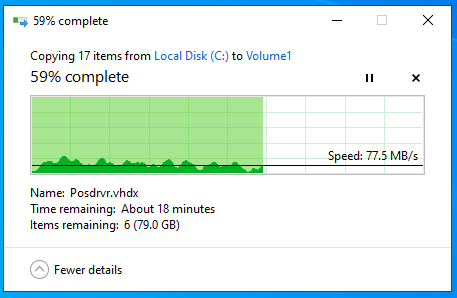

For our situation, the advantages of each method are as follows:

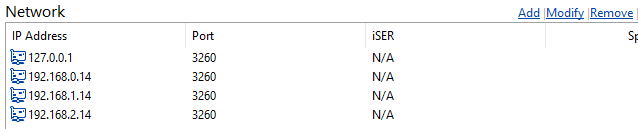

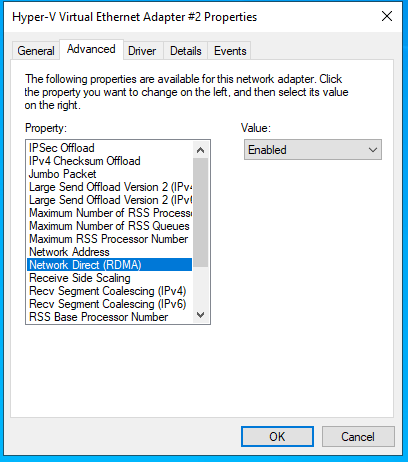

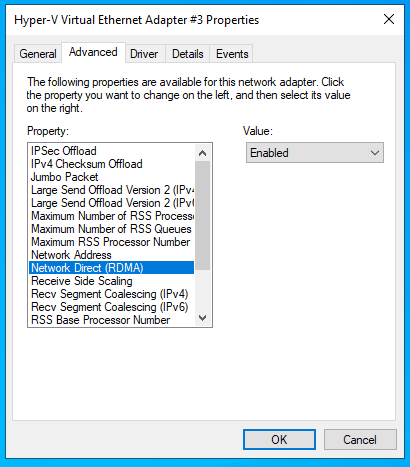

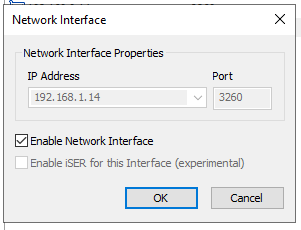

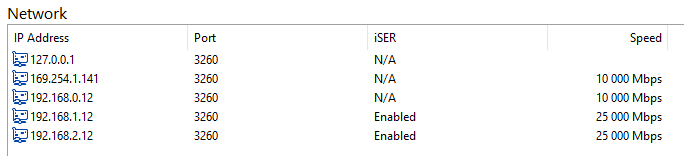

Windows executable: iSER support

Linux-based CVM: faster storage and network stacks, and polling to avoid interrupt handling and context switches

We have the option to deploy StarWind vSAN in Hyper-V as a CVM or as an executable. Based on the preceding information, which would you recommend as the final method? Once the method is finalized, we can assess further optimization of the hyper-converged infrastructure.

Thank you again for your time.