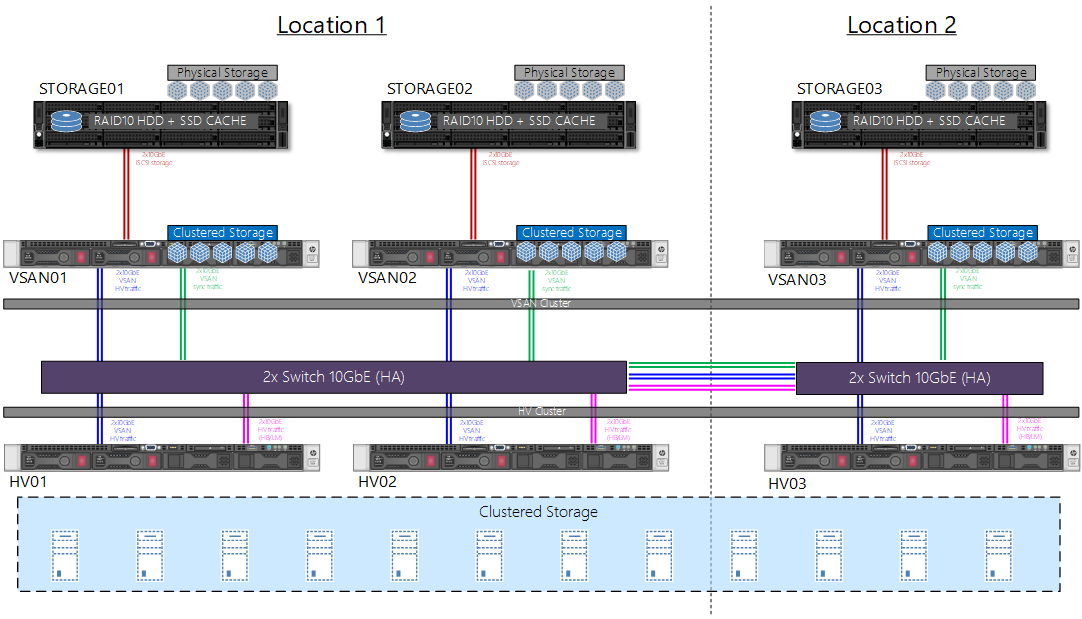

Currently, there is a Windows Server 2019 Hyper-V cluster consisting of 3 nodes connected to 2 storage appliances. The appliances contain HDDs plus SSDs for cache, and they are exposed via iSCSI over 2x10GbE connections.

Moving forward, to optimize the current solution, we'd like to use StarWind VSAN Free to build a 3-node VSAN cluster on top of 3 storage appliances (the current 2 plus 1 more), which will serve clustered storage to the HV cluster.

Basically, we'd like to re-use the existing storage appliances so that they provide the physical storage for each VSAN node, instead of direct-attached disks in the VSAN nodes.

Notes:

- there are 2 locations (different buildings at the same site), connected via Nx10GbE, so bandwidth and latency are not an issue

- the network is running on redundant 10GbE connections

- server NICs support RDMA and have full TCP/iSCSI offloads

- each storage appliance has 10x8TB HDD + 2x900GB SSD (for caching purposes), exposes itself over iSCSI/SMB and can do ODX

- VSAN nodes would run Windows Server 2019 Standard

- HV nodes are running Windows Server 2019 Datacenter

- the VSAN cluster shall use synchronous replication

- the ultimate goal is performance, robustness and high availability (especially during node/storage failures)
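To put the 2x10GbE iSCSI links of each storage appliance into numbers, here is a rough sketch of the theoretical ceiling. It assumes both links can be used at the same time (for example via MPIO) and ignores all protocol overhead; the function name is just illustrative.

```python
# Theoretical throughput ceiling for the aggregated iSCSI links of one appliance.
# Assumptions: both 10GbE links are active simultaneously, no protocol overhead.

def link_ceiling_gbytes_per_s(links: int, gbit_per_link: float) -> float:
    """Return the aggregate ceiling in GB/s (decimal units)."""
    return links * gbit_per_link / 8  # 8 bits per byte


if __name__ == "__main__":
    ceiling = link_ceiling_gbytes_per_s(links=2, gbit_per_link=10)
    print(f"2 x 10GbE ceiling: {ceiling} GB/s")
```

Real-world throughput will be lower, so this is only an upper bound for what one VSAN node could pull from its appliance.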

Now, the questions:

- what should be the method of exposing clustered storage from VSAN to HV?

  - 1a) shall the VSAN cluster serve SOFS SMB3 clustered storage to the HV nodes, as per your comment "microsoft-to-microsoft"?

  - 1b) or shall the HV cluster be connected to the VSAN cluster via old-school iSCSI?

- is the VSAN Free license fully capable of serving this scenario (apart from the GUI management panel)?

- could you confirm the network bandwidth requirements in this scenario (especially the inter-VSAN ones)?

- could you confirm the network connections needed between VSAN nodes for HA/sync?

- is 32GB of RAM enough on the VSAN nodes for this configuration (not planning to use LSFS)?

- what would be the best configuration for the physical storage appliances in order to expose their storage to the VSAN nodes?

  - 6a) each HDD and SSD separately to VSAN

  - 6b) each HDD separately to VSAN, but leave the SSDs for caching on the storage side

  - 6c) RAID-10, single big LUN (40TB) to VSAN

  - 6d) RAID-10, multiple smaller LUNs to VSAN
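For context on the 40TB figure in 6c, here is a rough sketch of the capacity arithmetic. It assumes plain RAID-10 over all ten 8TB HDDs per appliance, decimal terabytes, and no hot spares or formatting overhead. The last figure additionally assumes a 3-way mirror in which every VSAN node holds a full copy of the data. The function name is just illustrative.

```python
# Capacity arithmetic for one appliance and for the planned 3-appliance setup.
# Assumptions: RAID-10 across all HDDs, no hot spares, no filesystem overhead.

def raid10_usable_tb(disks: int, disk_tb: float) -> float:
    """RAID-10 mirrors every disk once, so half of the raw capacity is usable."""
    if disks < 4 or disks % 2 != 0:
        raise ValueError("RAID-10 needs an even number of disks, at least 4")
    return disks * disk_tb / 2


if __name__ == "__main__":
    appliances = 3
    raw_per_appliance = 10 * 8                      # 10 x 8TB HDD
    usable_per_appliance = raid10_usable_tb(10, 8)  # single big LUN in 6c

    print(f"raw per appliance:    {raw_per_appliance} TB")
    print(f"RAID-10 per appliance: {usable_per_appliance} TB")
    print(f"raw across cluster:   {appliances * raw_per_appliance} TB")
    # Assumption: 3-way synchronous mirror, each node stores a full copy,
    # so cluster-usable capacity equals one appliance's usable capacity.
    print(f"usable with 3-way mirror: {usable_per_appliance} TB")
```

Options 6a and 6b would change this math, since redundancy would then have to be handled above the appliance rather than by its RAID-10.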

Best Regards,

Tomasz