
Expected Speeds

Posted: Fri Dec 20, 2013 10:04 pm

by CCSNET_Steve

Hey All,

I have just set up a StarWind test box after finding the product over the last week.

The test rig is a DL580 G5 (4 x quad-core) with 32GB of RAM running W2012.

Connected via a P800 are 36 x 300GB 15K SAS drives (external, in MSA60s).

To test, I have created a single logical volume containing all 36 drives in RAID 10 (128 or 256KB block size, I forget which), then presented this to Windows as a GPT volume. File copies within Windows give me around 500MB/s (i.e. if I copy and paste to this disk array).

Within StarWind I have created a 100GB device and presented it to my ESX cluster. I migrated a VM to the presented LUN to test the speed of transfer within the VM and found I could only transfer at 25MB/s. Even with 2 NICs in a clustered device (forgive me if this is the wrong term, I'm still getting to grips with StarWind), I was still only getting 30-50MB/s.

All iSCSI cards are gigabit, and the LUN was set up with Round Robin (default config, not the 1 IO adjustment).

Is this the expected throughput of this system? If so, why, and how can I improve it?

Regards

Re: Expected Speeds

Posted: Mon Dec 23, 2013 12:37 pm

by jeddyatcc

Typically you will lose 10-20% of native speed with iSCSI, depending on HA and a ton of other variables, but in your case you have a problem at the bus layer.

SAS typically = 6Gbps, so you would need 6 x 1Gbps cards to even make that a fair fight (probably 7, depending on the length of the run and whether you are using fiber). For a single 1Gbps connection the maximum throughput is 112MB/s, and while you aren't even getting that, you have too many variables to pin it down.

I suggest using a RAM disk for testing the network. You should be able to push 80-100MB/s on a single 1Gbps port that is dedicated to iSCSI traffic; if you cannot, then there is something wrong in the network/VMware configuration. To test this, you can connect to the StarWind box from any test device.

One thing to remember when doing testing like this: always copy files from the fastest medium you have (memory or local SSD). If you are reading from a local C: drive, your speeds can be very limited. Also, when using 1Gbps connections, iSCSI hates copy and paste within the same volume, no matter what back end you use (I have tried 5 different ones). 10Gbps helps a lot with this, but you are much better off having multiple connections.

I apologize for the brain dump, but I had to do a ton of testing to validate my request for new switches and a dedicated iSCSI network.
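The bus-vs-network arithmetic above can be sketched in a few lines. This is a back-of-the-envelope sketch that assumes the 6Gbps SAS figure quoted in the post and decimal units (1 MB = 10^6 bytes); the function names are made up for illustration and nothing here models protocol overhead.

```python
import math


def links_to_match(backend_gbps: float, link_gbps: float = 1.0) -> int:
    """Number of network links whose combined line rate reaches the back-end rate."""
    return math.ceil(backend_gbps / link_gbps)


def raw_mb_per_s(link_gbps: float) -> float:
    """Raw line rate in MB/s (decimal), before Ethernet/TCP/iSCSI overhead."""
    return link_gbps * 1e9 / 8 / 1e6


print(links_to_match(6.0))  # 6 x 1Gbps links to match one 6Gbps SAS link
print(raw_mb_per_s(1.0))    # 125.0 MB/s raw on a 1Gbps link
```

The gap between the 125MB/s raw rate and the ~112MB/s practical figure quoted above is the framing and protocol overhead on the wire.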

Re: Expected Speeds

Posted: Sun Dec 29, 2013 11:27 am

by CCSNET_Steve

Thanks for the reply, and no problem re: the brain dump.

Currently I have 2 x 1Gb cards in the ESX hosts and a quad-port 1Gb card in the StarWind box. I've now set Round Robin to 1 IOPS, and am now seeing a copy/paste start at around 150MB/s, which drops to around 60MB/s after around 2GB of copy (of a 4GB ISO).
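The Round Robin IOPS setting controls how many I/Os ESX sends down one path before moving to the next (the ESX default is 1000; here it was changed to 1). The toy model below illustrates that switching rule only. It is not VMware's code, it assumes a simple per-I/O counter, and the path names `vmk1`/`vmk2` are hypothetical.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinSelector:
    """Toy model of a round-robin path selector: send `iops_limit` I/Os down
    one path, then move to the next. Illustrative only, not VMware's code."""

    def __init__(self, paths: Iterable[str], iops_limit: int = 1000) -> None:
        paths = list(paths)
        if not paths:
            raise ValueError("need at least one path")
        if iops_limit < 1:
            raise ValueError("iops_limit must be >= 1")
        self._paths = cycle(paths)
        self._limit = iops_limit
        self._current = next(self._paths)
        self._issued = 0  # I/Os sent on the current path so far

    def next_path(self) -> str:
        """Return the path the next I/O should use."""
        if self._issued == self._limit:
            self._current = next(self._paths)  # switch after iops_limit I/Os
            self._issued = 0
        self._issued += 1
        return self._current


# Hypothetical path names for two vmkernel ports bound to iSCSI.
default = RoundRobinSelector(["vmk1", "vmk2"])              # switch every 1000 I/Os
tuned = RoundRobinSelector(["vmk1", "vmk2"], iops_limit=1)  # switch every I/O
print([default.next_path() for _ in range(4)])  # ['vmk1', 'vmk1', 'vmk1', 'vmk1']
print([tuned.next_path() for _ in range(4)])    # ['vmk1', 'vmk2', 'vmk1', 'vmk2']
```

With the default limit, a short burst of I/O from one copy stays on a single 1Gb link; with a limit of 1, consecutive I/Os alternate across both links, which is the usual reasoning behind the change.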

I take on board your information re: the copy/paste. Honestly, I don't think there's budget to upgrade the iSCSI network to 10GbE (it is currently on its own network though, so there's a little win there).

I might be able to get hold of another couple of quad-port cards and then run MPIO between 6 x 1Gb ports on the ESX hosts and 8 on the StarWind box.
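A quick way to estimate what the extra ports could buy: with MPIO, the aggregate is capped by whichever side has fewer active 1Gbps ports. This sketch assumes the ~112MB/s per-link figure quoted earlier in the thread and ignores HA, switch and initiator overheads, so the numbers are ceilings, not predictions. The function name is hypothetical.

```python
# Assumption: ~112 MB/s usable per 1Gbps link, the figure quoted earlier in
# the thread. Real MPIO throughput will be lower than these ceilings.
PER_LINK_MB_S = 112.0


def mpio_ceiling_mb_s(initiator_ports: int, target_ports: int,
                      per_link_mb_s: float = PER_LINK_MB_S) -> float:
    """Upper bound on MPIO throughput: the side with fewer active ports
    limits the aggregate, however many ports the other side has."""
    return min(initiator_ports, target_ports) * per_link_mb_s


print(mpio_ceiling_mb_s(2, 4))  # current setup: 224.0
print(mpio_ceiling_mb_s(6, 8))  # proposed setup: 672.0
```

For comparison, the ~150MB/s at the start of the copy above sits under the ~224MB/s ceiling of the current 2-port setup.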

Regards

Re: Expected Speeds

Posted: Mon Dec 30, 2013 10:57 am

by Anatoly (staff)

Can I ask you to provide us with the following information:

· Detailed network diagram of SAN system

· Description of the actions that were performed before/at the time of the issue

· Operating system on servers participating in iSCSI SAN and client server OS

· RAID array model, RAID level and stripe size used, caching mode of the array used to store HA images, Windows volume allocation unit size

· NIC models, driver versions (driver manufacturer/release date) and NIC advanced settings (Jumbo Frames, iSCSI offload etc.)

Also, there is a document for pre-production SAN benchmarking:

http://www.starwindsoftware.com/starwin ... ice-manual

And a list of advanced settings which should be implemented in order to gain higher performance in iSCSI environments:

http://www.starwindsoftware.com/forums/ ... t2293.html

http://www.starwindsoftware.com/forums/ ... t2296.html

Please provide me with the requested information and I will be able to assist you further in the troubleshooting.

Also, I want to let you know that increasing the number of NICs should increase performance as well.

Re: Expected Speeds

Posted: Mon Dec 30, 2013 5:10 pm

by CCSNET_Steve

Hi, thanks for your message. I'll compile everything for you.

Regards

Re: Expected Speeds

Posted: Mon Dec 30, 2013 5:28 pm

by CCSNET_Steve

Hardware-wise, for the StarWind iSCSI box:

DL580 G5

System

4 x QuadCore Intel(R) Xeon(TM) Processor MP 2.4 GHz (x64 Family 6 Model 15 Stepping 11)

32GB RAM

Running Windows 2012R2

Networking

Normal - dual onboard Broadcom NICs

iSCSI - quad-port Intel PRO/1000 PT

Driver is Intel 9.15.11.0

Jumbo frames are set to 9014
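For context on what jumbo frames can gain on a 1Gbps link: the 9014 value in the Intel driver is normally read as a 9000-byte MTU plus the 14-byte Ethernet header, which lines up with the MTU 9000 set on the ESX side. The sketch below estimates the TCP payload ceiling per link for both frame sizes. It assumes IPv4 and TCP headers without options (40 bytes) and 38 bytes of per-frame wire overhead (preamble/SFD 8, Ethernet header 14, FCS 4, inter-frame gap 12), and it ignores iSCSI PDU headers, so treat the results as theoretical ceilings.

```python
# Assumptions: IPv4 + TCP headers with no options (40 bytes), and per-frame
# wire overhead of 38 bytes (preamble/SFD 8, Ethernet header 14, FCS 4,
# inter-frame gap 12). iSCSI PDU headers are ignored, so these are ceilings.
IP_TCP_HEADERS = 40
WIRE_OVERHEAD = 38


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + WIRE_OVERHEAD)


def payload_ceiling_mb_s(mtu: int, link_gbps: float = 1.0) -> float:
    """TCP payload ceiling in MB/s (decimal) for a link of the given speed."""
    raw = link_gbps * 1e9 / 8 / 1e6  # 125 MB/s for 1Gbps
    return raw * tcp_payload_efficiency(mtu)


for mtu in (1500, 9000):
    print(mtu, round(payload_ceiling_mb_s(mtu), 1))
# 1500 118.7
# 9000 123.9
```

On these assumptions, jumbo frames add only a few MB/s of headroom per link; the rest of their benefit is fewer packets to process per MB transferred.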

All other options are left at default.

Arrays Raw

P400 card - 8 x 146GB 10K SAS

Driver Version 8.0.4.0

Firmware 7.22

P800(1) card - 36 x 300GB 15K SAS

Driver Version 8.0.4.0

Firmware 7.22

P800(2) card - 25 x 300GB 10K SAS

Driver Version 8.0.4.0

Firmware 7.22

Arrays Presented to OS

A1 = P800(1) = 8 x 15K 300GB - RAID 10 - 256KB stripe

A2 = P800(2) = 6 x 10K 300GB - RAID 10 - 128KB stripe

OS View

A1 = single volume, 1090GB, default cluster size, presented as GPT

A2 = single volume, 837GB, default cluster size, presented as GPT

Re: Expected Speeds

Posted: Mon Dec 30, 2013 9:54 pm

by Anatoly (staff)

May I ask if you have benchmarked your system according to our guide?

Re: Expected Speeds

Posted: Mon Dec 30, 2013 11:19 pm

by CCSNET_Steve

I have seen the document. I have run some ATTO tests and will follow the recommendations in the document to test tomorrow.

Re: Expected Speeds

Posted: Tue Dec 31, 2013 11:21 am

by CCSNET_Steve

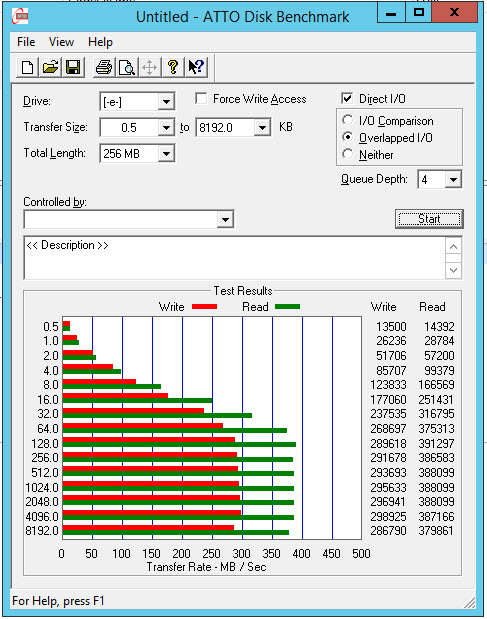

This shows the speed on the local disk

- Local Disk ATTO


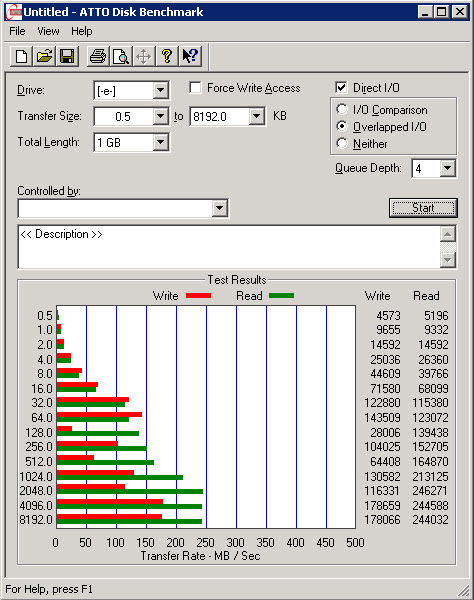

This is the speed via the ESX host I was testing. This has multiple iSCSI connections, IOPS set to 1, etc.

- RAM DISK via ESX Host


Obviously some issues here.

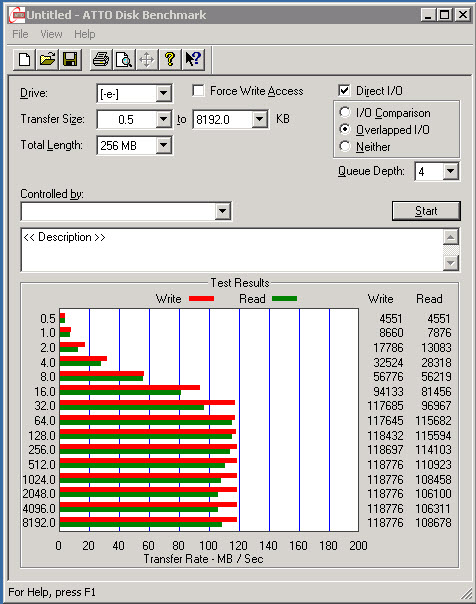

So I connected a physical server (Dell 1950 III, onboard NIC) to the RAM disk and I get this:

- RAM disk on a physical host, single iSCSI connection

That is about the expected speed of a single connection.

So it looks like my issues are coming from the ESX iSCSI connection.

What are the next steps? It would appear to be an issue with the ESX iSCSI initiator, but I have set all the options: IOPS to 1, MTU to 9000, etc.

Regards

Re: Expected Speeds

Posted: Wed Jan 08, 2014 1:14 am

by CCSNET_Steve

Do you need anything further from me to help diagnose the issue?

Regards

Re: Expected Speeds

Posted: Wed Jan 08, 2014 3:50 pm

by Anatoly (staff)

Have you benchmarked the network please?

Re: Expected Speeds

Posted: Sat Jan 11, 2014 6:28 pm

by CCSNET_Steve

Apologies, but do you mean via a RAM disk connection, or another benchmark?

Regards

Re: Expected Speeds

Posted: Mon Jan 13, 2014 4:53 pm

by Anatoly (staff)

A RAM disk will work like a charm, but you can use iperf or the NTttcp tool from Microsoft if you wish.
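To show what a tool like iperf or NTttcp is measuring, here is a minimal single-stream TCP throughput sketch. It is a toy, not a substitute for those tools: as written, both ends run in one process over loopback just to show the mechanics, so the number reflects the local machine, not the iSCSI network. For a real test you would run the receiving half on the StarWind box and the sending half on the initiator side, or simply use iperf/NTttcp. The function names and the 64KB chunk size are arbitrary choices for illustration.

```python
import socket
import threading
import time

CHUNK = 64 * 1024  # bytes per send/recv call (arbitrary choice)


def _receiver(listener: socket.socket, result: list) -> None:
    """Accept one connection and count bytes until the sender closes it."""
    conn, _ = listener.accept()
    total = 0
    with conn:
        while True:
            data = conn.recv(CHUNK)
            if not data:
                break
            total += len(data)
    result.append(total)


def measure_throughput(host: str = "127.0.0.1",
                       total_bytes: int = 64 * 1024 * 1024) -> tuple:
    """Send at least total_bytes over one TCP stream; return (MB/s, bytes received)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind((host, 0))  # port 0: let the OS pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        result: list = []
        receiver = threading.Thread(target=_receiver, args=(listener, result))
        receiver.start()

        payload = b"\x00" * CHUNK
        start = time.perf_counter()
        with socket.create_connection((host, port)) as sender:
            sent = 0
            while sent < total_bytes:
                sender.sendall(payload)
                sent += len(payload)
        receiver.join()  # wait until the receiver has read every byte
        elapsed = time.perf_counter() - start
    return result[0] / elapsed / 1e6, result[0]


if __name__ == "__main__":
    mb_s, nbytes = measure_throughput()
    print(f"{nbytes} bytes at {mb_s:.1f} MB/s")
```

Timing stops only after the receiver has drained the socket, so the result counts bytes actually delivered rather than bytes handed to the local send buffer.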

Re: Expected Speeds

Posted: Sat Jan 18, 2014 12:09 am

by CCSNET_Steve

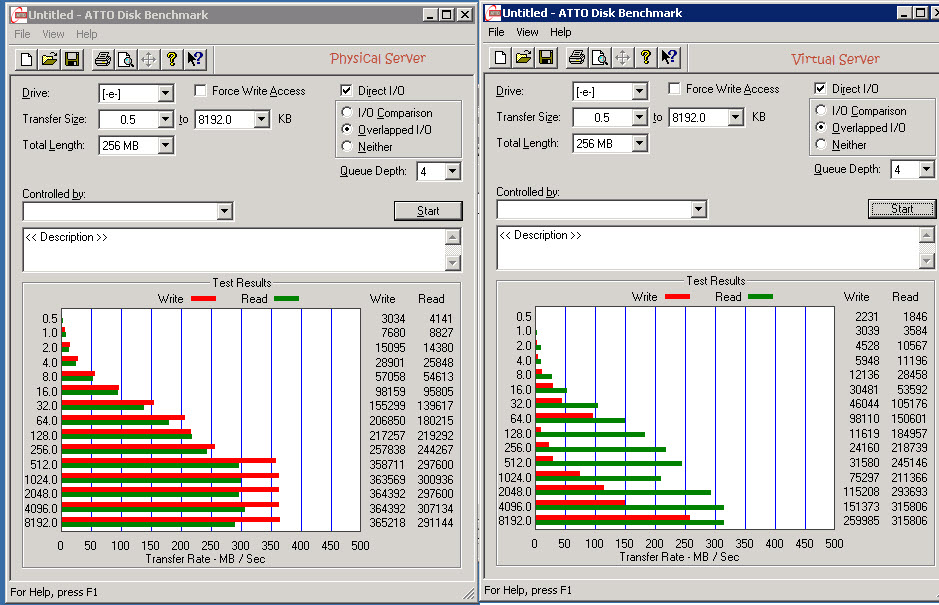

I have tested a RAM disk on:

a) a physical server (Dell 1950 III), quad 1Gb links in MPIO

b) a virtual server (hosted on our ESX 4.1 box), quad 1Gb links, Round Robin MPIO set to 1

Both have jumbo frames enabled


I can't see what else I can do. It appears the disks are fine, it appears the network is fine, and the speed appears to be achievable on the ESX host (the 8096 shows 350/300 and 250/300).

I'm fresh out of ideas and have spent a little over a month trying to get this sorted. Any ideas would be great.

Regards

Re: Expected Speeds

Posted: Tue Jan 21, 2014 12:25 pm

by Anatoly (staff)

Two questions for now:

*Can you confirm that you have used GPT formatting instead of MBR on every layer that is involved in the test?

*Can I ask if you have an additional Windows Server box that you can use to run IOmeter (that should exclude problems in the virtualization layer)?

Thank you