
ESXi Round Robin performance worse than fixed path

Posted: Tue Oct 30, 2012 4:01 pm

by craggy

I am evaluating StarWind as an alternative to Nexenta and I have run into some teething problems.

I have an ESXi 5 cluster running on HP BL460c G1 blades. Each blade has 2 integrated Broadcom gigabit NICs and an additional NC320m (Intel dual-port NIC). The Broadcom NICs are used for administration and management; the Intel NICs are used for dual iSCSI paths to the storage server. Each NIC has its own switch and vmkernel interface, configured with different subnets. We have 2x GbE2c switches, one for each iSCSI interface. The configuration is as follows.

ESXi Host:

NIC1 Broadcom WAN and management (80.9x.xx.xx subnet), vSwitch 1, vmkernel 1

NIC2 Broadcom admin and vMotion (10.10.1.x/24 subnet), vSwitch 2, vmkernel 2

NIC3 Intel iSCSI (10.10.10.x/24 subnet), vSwitch 3, vmkernel 3

NIC4 Intel iSCSI 2 (10.10.11.x subnet), vSwitch 4, vmkernel 4

Storage Host:

NIC1 Broadcom WAN and management (80.9x.xx.xx subnet), vSwitch 1, vmkernel 1

NIC2 Broadcom admin and vMotion (10.10.1.x/24 subnet), vSwitch 2, vmkernel 2

NIC3 Intel iSCSI (10.10.10.x/24 subnet), vSwitch 3, vmkernel 3

NIC4 Intel iSCSI 2 (10.10.11.x subnet), vSwitch 4, vmkernel 4

Jumbo frames are enabled across the board, IOPS is set to 1, and Delayed ACK is enabled.

Nexenta runs on a BL460c G1 blade with 2x dual-core CPUs and 16GB RAM, with an SB40c storage blade running 6x HP 15k SAS disks.
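Because jumbo frames only help when every hop agrees on the MTU, a common sanity check is a don't-fragment ping sized to fill the MTU exactly. A minimal sketch of the payload arithmetic, assuming an MTU of 9000 (the post does not state the value) and plain 20-byte IPv4 and 8-byte ICMP headers with no options:

```python
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet.
# ASSUMPTION: 9000 is a typical jumbo-frame MTU; the thread does not state it.
IPV4_HEADER = 20  # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes, ICMP echo request header


def max_ping_payload(mtu: int) -> int:
    """Return the largest ping payload that avoids fragmentation at this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


for mtu in (1500, 9000):
    print(f"MTU {mtu}: max ping payload {max_ping_payload(mtu)} bytes")
```

On ESXi a size like this is typically passed to vmkping together with the don't-fragment flag, for example `vmkping -d -s 8972 <target IP>`, once per iSCSI vmkernel interface.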

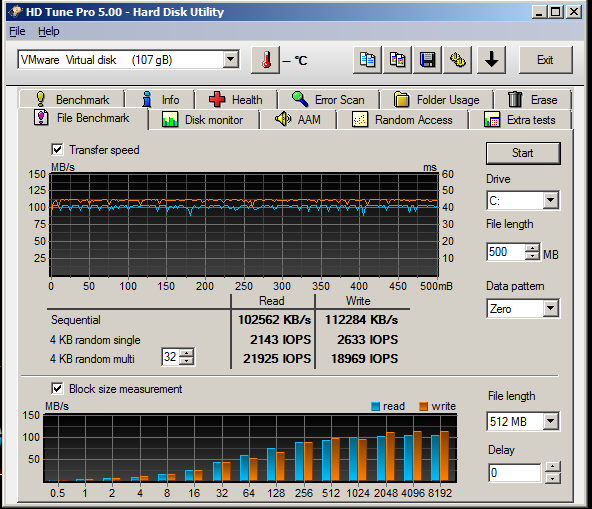

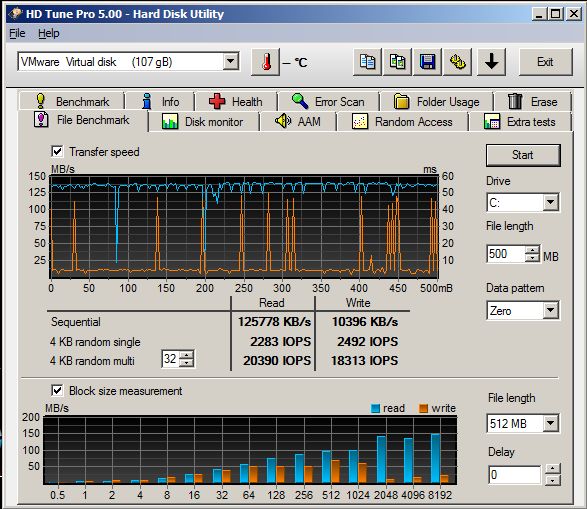

With Nexenta using Round Robin I get a consistent 220MBps write and 216MBps read from a Windows 2008 R2 VM running on any of the blades. Once I switch to StarWind, performance is really inconsistent and write speeds are very poor, varying erratically from 20MBps to 100MBps. If I change IOPS back to 1000 it behaves a bit better but is still nowhere near the Nexenta performance. If I disable Round Robin and use Fixed path it behaves much as I would expect from a single gigabit link. (Running a disk benchmark locally on the StarWind server I get between 300MBps and 400MBps read and write, so I know it's not a storage driver issue or something similar.)
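For reference, the figures above can be set against raw line rate. A rough sketch, assuming 1 Gbit/s equals 125 MB/s per link and ignoring Ethernet, TCP and iSCSI overhead, so real ceilings are somewhat lower:

```python
# Raw line-rate comparison for gigabit iSCSI paths.
# ASSUMPTION: decimal megabytes and no protocol overhead; real ceilings are lower.
LINK_MB_S = 1_000_000_000 / 8 / 1_000_000  # 1 Gbit/s expressed in MB/s (125.0)


def line_rate_mb_s(paths: int) -> float:
    """Aggregate raw line rate in MB/s for the given number of gigabit paths."""
    return paths * LINK_MB_S


def utilisation(measured_mb_s: float, paths: int) -> float:
    """Fraction of the aggregate raw line rate that a measured throughput represents."""
    return measured_mb_s / line_rate_mb_s(paths)


# Figures quoted in the post, all measured with two Round Robin paths.
for label, mb_s in [("Nexenta write", 220), ("Nexenta read", 216),
                    ("StarWind write, low", 20), ("StarWind write, high", 100)]:
    share = utilisation(mb_s, 2)
    print(f"{label}: {mb_s} MB/s = {share:.0%} of {line_rate_mb_s(2):.0f} MB/s")
```

On these assumptions a single Fixed path tops out at 125 MB/s, and the 300 to 400 MB/s local benchmark sits above the 250 MB/s two-path ceiling, which is consistent with the disks not being the bottleneck.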

Fixed Path (attachment: fixed.png)

Round Robin (attachment: roundrobin.png)

Any help would be appreciated.

Re: ESXi Round Robin performance worse than fixed path

Posted: Wed Oct 31, 2012 10:37 pm

by craggy

Anybody any suggestions?

Re: ESXi Round Robin performance worse than fixed path

Posted: Thu Nov 01, 2012 4:17 pm

by Max (staff)

Hi Craggy,

My first suggestion is to look deeper into the SAN's settings.

What version of StarWind are you currently running?

Is StarWind running on the same hardware as Nexenta, and which OS are you using?

Based on the graphs alone I'd suggest disabling delayed ACK, but more details may shed more light on the situation.

Re: ESXi Round Robin performance worse than fixed path

Posted: Thu Nov 01, 2012 8:56 pm

by craggy

Hi,

StarWind is version 6.0.4837. It is running on hardware identical to the Nexenta install.

I tried disabling delayed ACK but it made no noticeable difference.

The OS is Windows Server 2008 R2. I tried with the latest updates installed, including SP1. The latest Intel NIC drivers and the latest HP Smart Array P400 firmware and drivers have also been installed, but none of this made any difference either.

Re: ESXi Round Robin performance worse than fixed path

Posted: Fri Nov 02, 2012 10:23 am

by Max (staff)

OK, let's take the disk subsystem out of the equation.

Could you please create a 4 GB RAM device and connect it to the VM the same way as you did for the regular image file?

Then run another benchmark. If the writes are still spiky and uneven, the problem is a bit deeper than just SW.

Re: ESXi Round Robin performance worse than fixed path

Posted: Fri Nov 02, 2012 11:33 am

by craggy

I created a RAM disk and ran the same tests, and the results were almost identical.

Re: ESXi Round Robin performance worse than fixed path

Posted: Sun Nov 04, 2012 9:03 pm

by craggy

Any ideas where to go from here?

Re: ESXi Round Robin performance worse than fixed path

Posted: Mon Nov 05, 2012 1:14 am

by Max (staff)

Good news - it's not StarWind then.

Bad news - it's something in the network between the servers.

First, set the TcpAckFrequency parameter to 1 on all iSCSI interfaces, then re-run the RAM benchmark test.

If the issue reproduces, switch to the Fixed path MPIO policy and re-run the benchmark test over each of the links in turn.

This will allow us to pinpoint the problematic connection.

Also, could you please tell me if you've enabled jumbo frames or any non-default networking settings on any side (SAN, switch, vSphere)?

Especially:

netsh int tcp set heuristics disabled

netsh int tcp set global autotuninglevel=normal

netsh int tcp set global congestionprovider=ctcp

netsh int tcp set global ecncapability=enabled

netsh int tcp set global rss=enabled

netsh int tcp set global chimney=enabled

netsh int tcp set global dca=enabled

Re: ESXi Round Robin performance worse than fixed path

Posted: Mon Nov 05, 2012 5:18 pm

by Bohdan (staff)

Please try the following settings and let us know the results:

esxcli storage nmp device list

esxcli storage nmp psp roundrobin deviceconfig set -d eui.47a940de4d7190c4 -I 1 -t iops

Replace eui.47a940de4d7190c4 with your StarWind device ID.

Rescan the iSCSI software adapter.

Go to iSCSI software adapter -> Properties, Network Configuration tab, and remove all VMkernel port bindings.

Run the HD Tune test inside the VM.

Please also try ATTO Disk Benchmark with the following settings: select the drive which is on the StarWind datastore, Overlapped I/O, Queue Depth 10.
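To make the effect of the `-t iops -I 1` setting above concrete, here is a simplified model of a Round Robin policy that moves to the next path after a fixed number of I/Os. It is only a sketch: the path names are hypothetical labels, and the real path selection plugin handles path state, errors and queuing that are not modelled here.

```python
from itertools import cycle


def round_robin_paths(num_ios, paths, iops_limit):
    """Assign each I/O to a path, moving to the next path after iops_limit I/Os."""
    if iops_limit < 1:
        raise ValueError("iops_limit must be at least 1")
    path_iter = cycle(paths)
    current = next(path_iter)
    sent_on_current = 0
    assignment = []
    for _ in range(num_ios):
        if sent_on_current == iops_limit:
            # Limit reached on the current path: switch and restart the count.
            current = next(path_iter)
            sent_on_current = 0
        assignment.append(current)
        sent_on_current += 1
    return assignment


# Hypothetical path labels standing in for the two iSCSI vmkernel interfaces.
paths = ["vmk3", "vmk4"]
for limit in (1, 1000):
    result = round_robin_paths(1500, paths, limit)
    counts = {p: result.count(p) for p in paths}
    print(f"iops limit {limit}: {counts}")
```

In this model a limit of 1 alternates paths on every I/O, while a limit of 1000 sends long bursts down one link before switching, so short benchmarks can end up mostly on a single path.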

Re: ESXi Round Robin performance worse than fixed path

Posted: Fri Nov 09, 2012 11:44 pm

by craggy

Thanks for the replies, but none of the above has made it any better.

I really don't think this is a network or hardware issue. My blades all connect to Nexenta SANs and an Openfiler backup server without any issues. The only iSCSI datastore with poor Round Robin performance is the StarWind one.

I've even tried moving the OS disks and RAID 5 array to another blade server and storage blade to rule out a hardware issue, but it made no difference.

Re: ESXi Round Robin performance worse than fixed path

Posted: Mon Nov 12, 2012 1:20 pm

by Anatoly (staff)

I'm not trying to be rude, but the fact that no network-related cause has been identified doesn't mean there isn't one. It could even be the cables (by the way, have you tried replacing them?). Also, what RAID level is used on the OF and SW servers for the shared storage?

I'd also ask you to provide us with a detailed network diagram of your system.

Thank you.

Re: ESXi Round Robin performance worse than fixed path

Posted: Tue Nov 13, 2012 9:13 pm

by craggy

For this installation there are no network cables. Everything is on blade servers going through GbE2c blade switches internally within the blade enclosure.

I have tested each link separately and get full performance as expected, but once I change to Round Robin using both links at the same time, that's when the problem arises.

To investigate further I have installed a brand new copy of Server 2008 R2 and StarWind on a spare HP DL320s storage server with a dual-core Xeon, 8GB RAM and a P800 RAID controller with 6x 15k SAS disks in RAID 5. I added a dual-port Intel Pro 1000 NIC for the two iSCSI links and the problem is exactly as before.

As for the RAID in the Nexenta servers, it's 6x 15k SAS disks in RAID 5 on a P400 controller, with a dual-port Intel Pro 1000 NIC for the iSCSI links.

Re: ESXi Round Robin performance worse than fixed path

Posted: Wed Nov 14, 2012 4:53 pm

by CaptainTaco

Good day,

Not sure if this will help or not, but I ran into this problem a while back, with exactly the same symptoms: high (proper) read performance and abysmal write performance. The biggest difference in my case is that I was running over a Cisco 2960-S switch, with the StarWind software behind a 10Gig SFP+ connection and the ESXi servers on 4x Gigabit connections, multipathing enabled, IOPS set to 1.

What I eventually figured out was the problem was not limited to just running with round robin multipathing, but that it was a problem over the individual gigabit lines, and quite random. Sometimes it would be present, and sometimes it would not. What I eventually discovered was the problem was caused by QoS settings on the switch itself. Since you are not going through a physical switch, the problem may not be the same, but I figured I would throw it out there anyways. Once the entire QoS buffer was dedicated to data and QoS itself disabled, the problem disappeared. I have since gone to just using 10 Gigabit connections, bypassing the switch completely and direct connecting to the SAN using the Gigabit connections as fail-over, but I did do extensive testing and confirmed the issue resolved.

I didn't go into great detail here as I am not sure the problem is the same, but the symptoms certainly are judging by the reports you placed in your original post. If you want additional details, at some point I can provide them.

Re: ESXi Round Robin performance worse than fixed path

Posted: Fri Nov 16, 2012 10:07 am

by anton (staff)

Thank you for the clarification. We suspect it's something inside the NIC drivers on either side (ESXi initiator or Windows target), as other OSes don't suffer from this issue. We're trying to arrange a remote session to figure out what's broken.


Re: ESXi Round Robin performance worse than fixed path

Posted: Fri Nov 16, 2012 10:09 am

by anton (staff)

OK, our engineers have contacted you to arrange a remote session so we can check everything ourselves and try to find a solution. Please check your e-mail. Thanks!
