Public beta (bugs, reports, suggestions, features and requests)


-

robnicholson

- Posts: 359

- Joined: Thu Apr 14, 2011 3:12 pm

Fri Feb 21, 2014 6:37 pm

Where is the calculator that lets you work out how much memory is needed for a given disk size with dedupe? I'm just experimenting in the lab and it says I need a massive 11GB of RAM for a 1TB disk. Please tell me this isn't what's really needed?

One of the compelling reasons for looking at StarWind v8 is the deduplication option, specifically for use with DPM. Our storage array is already 20TB, and if that 1TB = 11GB scales up linearly then we'd need a server with 220GB of memory! That's just in the ridiculous category.

Our primary disk system is 12TB, and even then, needing 128GB of RAM just for deduplication suggests the algorithm doesn't scale to large disk systems.
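A rough way to sanity-check these numbers: the sketch below assumes RAM grows linearly at the 11GB-per-1TB ratio the lab install reported, and borrows the 4KB block size StarWind gives for its dedupe. The function names are hypothetical, and treating GB/TB as binary units (GiB/TiB) is an assumption. On those assumptions, 12TB works out to about 132GB (close to the 128GB figure above) and 20TB to 220GB, and the ratio implies roughly 44 bytes of index RAM per 4KB block.

```python
# Back-of-the-envelope RAM estimate for in-line block dedupe.
# Assumptions (not from StarWind docs): RAM scales linearly with disk size at
# the 11 GB per 1 TB ratio seen in the lab, and GB/TB here mean GiB/TiB.
GIB = 2**30
TIB = 2**40
BLOCK_SIZE = 4 * 2**10  # 4 KB dedupe block size


def ram_needed_gb(disk_tb: float, gb_per_tb: float = 11.0) -> float:
    """Linear extrapolation of the lab figure to a bigger disk."""
    return disk_tb * gb_per_tb


def implied_bytes_per_block(gb_per_tb: float = 11.0, block_size: int = BLOCK_SIZE) -> float:
    """Index RAM per block implied by the observed GB-per-TB ratio."""
    blocks_per_tb = TIB // block_size  # 268,435,456 blocks of 4 KB in 1 TiB
    return gb_per_tb * GIB / blocks_per_tb


for tb in (1, 12, 20):
    print(f"{tb:>2} TB -> {ram_needed_gb(tb):.0f} GB RAM")
print(f"~{implied_bytes_per_block():.0f} bytes of RAM per 4 KB block")
```

Whatever the real per-entry layout is, the point stands: an in-memory index costs a fixed amount per block, so halving the block count (bigger blocks) or moving entries off RAM are the only ways to shrink it.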

Cheers, Rob.

-

robnicholson

- Posts: 359

- Joined: Thu Apr 14, 2011 3:12 pm

Fri Feb 21, 2014 6:38 pm

P.S. It also means I can't trial StarWind with DPM, as there's no way I can build a lab with 256GB of RAM.

Cheers, Rob.

-

anton (staff)

- Site Admin

- Posts: 4010

- Joined: Fri Jun 18, 2004 12:03 am

- Location: British Virgin Islands

Fri Feb 21, 2014 8:43 pm

StarWind deduplication is a) very small block (4KB) and b) in-line. It's designed for primary VM workloads (hosting running virtual machines), something Windows cannot do out of the box and, with an off-line implementation, never will. So our dedupe is not "one size fits all": it's a very bad choice for ice-cold data (typical backups), which MS built-in dedupe handles quite nicely. Key point here: use StarWind + LSFS for running VMs and use MS built-in dedupe for backups.
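To show why an in-line, 4KB-block design wants its hash index in RAM, here is a toy sketch. It is not StarWind's implementation: the class name, SHA-256 as the block fingerprint, and the Python list standing in for the disk are all assumptions. Every write is split into 4KB blocks and looked up in the index before anything is stored, so the index sits on the write path and holds one entry per unique block.

```python
import hashlib

BLOCK_SIZE = 4096  # 4 KB blocks, as quoted above


class InlineDedupStore:
    """Toy in-line block deduplicator (hypothetical, for illustration only)."""

    def __init__(self):
        self.index = {}     # digest -> physical block number, kept in RAM
        self.blocks = []    # stand-in for the backing disk
        self.refcount = []  # number of references to each physical block

    def write(self, data: bytes) -> list[int]:
        """Store data and return the physical block numbers it maps to."""
        mapping = []
        for off in range(0, len(data), BLOCK_SIZE):
            # The last partial block is zero-padded to a full 4 KB.
            chunk = data[off:off + BLOCK_SIZE].ljust(BLOCK_SIZE, b"\0")
            digest = hashlib.sha256(chunk).digest()
            pbn = self.index.get(digest)  # lookup happens before the write
            if pbn is None:               # new content: store it once
                pbn = len(self.blocks)
                self.blocks.append(chunk)
                self.refcount.append(0)
                self.index[digest] = pbn
            self.refcount[pbn] += 1       # duplicate: only add a reference
            mapping.append(pbn)
        return mapping

    def read(self, mapping: list[int]) -> bytes:
        return b"".join(self.blocks[p] for p in mapping)
```

Writing the same 4KB block twice stores it once and bumps a reference count. An off-line (post-process) dedupe such as the Windows one does the opposite: it writes everything first and collapses duplicates later in a scheduled job, so it does not need the whole index on the write path.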

P.S. We're not trying to compete with MSFT; rather, we provide a complementary solution "fixing holes in MSFT product strategy" (c) ...

Regards,

Anton Kolomyeytsev

Chief Technology Officer & Chief Architect, StarWind Software

-

anton (staff)

- Site Admin

- Posts: 4010

- Joined: Fri Jun 18, 2004 12:03 am

- Location: British Virgin Islands

Fri Feb 21, 2014 8:49 pm

Good news: we're working on snapshots for FLAT (Image File) containers as well. Primary storage would keep the up-to-date copy of the data, and the actual snapshots would be off-loaded to dedicated snapshot database nodes (that would allow you to have SSD or SAS of limited capacity on the primary storage nodes and cheap, bigger-capacity SATA on the snapshot database nodes). With VSS support, of course. It should be ready with the RC (this is one of the reasons we've slowed down the RC, as we have issues with this implementation and with async replication as well). Stay tuned.

robnicholson wrote:PS. It also means I can't trial StarWind with DPM as there is no way I can build a lab with 256GB of RAM

Cheers, Rob.

Regards,

Anton Kolomyeytsev

Chief Technology Officer & Chief Architect, StarWind Software

-

robnicholson

- Posts: 359

- Joined: Thu Apr 14, 2011 3:12 pm

Wed Feb 26, 2014 6:51 pm

I appreciate you're aiming here primarily for performance, but there is a big requirement for secondary and maybe even tertiary storage for long-term backup, where performance isn't such a big deal.

So maybe one for the future: same algorithms, but don't insist that the deduplication hash table has to be in memory. Do a bit of a hybrid and keep some of it cached in memory and the rest on disk. It would work in exactly the same way, but obviously much slower. I'm willing to trade off performance for a much reduced memory requirement.
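The hybrid idea can be sketched as an index that keeps only the hottest entries in a bounded in-memory LRU cache and the full digest-to-block table on disk. This is a hypothetical illustration, not a StarWind feature: the class and method names are made up, and SQLite is just a convenient on-disk key-value store. Lookups that miss the cache cost a disk read, which is exactly the performance trade-off described above.

```python
import sqlite3
from collections import OrderedDict


class HybridDedupIndex:
    """Hypothetical dedupe index: hot entries in RAM, full table on disk."""

    def __init__(self, path: str = ":memory:", cache_entries: int = 1024):
        # Pass a file path to keep the full index on disk; ":memory:" is for demos.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS idx (digest BLOB PRIMARY KEY, pbn INTEGER)")
        self.cache = OrderedDict()  # digest -> pbn, ordered by recency
        self.cache_entries = cache_entries
        self.disk_lookups = 0       # how often we fell through to disk

    def _remember(self, digest: bytes, pbn: int) -> None:
        self.cache[digest] = pbn
        self.cache.move_to_end(digest)
        if len(self.cache) > self.cache_entries:
            self.cache.popitem(last=False)  # evict the least recently used entry

    def get(self, digest: bytes):
        if digest in self.cache:            # fast path: RAM hit
            self.cache.move_to_end(digest)
            return self.cache[digest]
        self.disk_lookups += 1              # slow path: query the on-disk table
        row = self.db.execute(
            "SELECT pbn FROM idx WHERE digest = ?", (digest,)).fetchone()
        if row is None:
            return None
        self._remember(digest, row[0])
        return row[0]

    def put(self, digest: bytes, pbn: int) -> None:
        self.db.execute("INSERT OR REPLACE INTO idx VALUES (?, ?)", (digest, pbn))
        self._remember(digest, pbn)
```

RAM use is now capped by `cache_entries` rather than by disk size; how much slower it gets depends on how often writes hit blocks that have fallen out of the cache.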

However, this might be moot anyway. I've only recently discovered that DPM 2012 can only keep something like 64 recovery points and is therefore useless for long-term backup windows, unless you have two backup schedules: a normal one running a few times a day for short-term recovery and another one that does a monthly backup. But with DPM that means you need at least twice the disk space to keep two replicas. So basically, forget DPM, even with deduplication, for long-term backups. We were considering AppAssure anyway, which has deduplication baked in, plus it handles historical backups in a much cleverer way.
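The arithmetic behind "useless for long-term backup windows" is simple: with a fixed cap on recovery points, the oldest restorable point is the cap times the backup interval. The sketch takes the "something like 64" figure at face value (check the actual limits for your DPM version), uses hypothetical function names, and models the two-schedule workaround as one full replica per schedule, as described in the post.

```python
MAX_RECOVERY_POINTS = 64  # "something like 64" per the post; approximate


def retention_days(interval_hours: float, max_points: int = MAX_RECOVERY_POINTS) -> float:
    """How far back you can restore once the oldest points start rolling off."""
    return max_points * interval_hours / 24


def replica_space_tb(protected_tb: float, schedules: int = 2) -> float:
    """Each protection schedule keeps its own replica of the protected data."""
    return protected_tb * schedules


# Three backups a day (every 8 hours) only reaches back about three weeks...
print(f"{retention_days(8):.1f} days")        # 21.3 days
# ...while a monthly schedule (assumed 30-day interval) covers about 5 years,
print(f"{retention_days(30 * 24):.0f} days")  # 1920 days
# but running both means two replicas, e.g. 20 TB protected -> 40 TB of replicas.
print(f"{replica_space_tb(20):.0f} TB")
```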

For deduplication of our primary data (our big file share), we could consider turning on Windows 2012 deduplication, which worked pretty well in the lab.

Cheers, Rob.

-

anton (staff)

- Site Admin

- Posts: 4010

- Joined: Fri Jun 18, 2004 12:03 am

- Location: British Virgin Islands

Wed Feb 26, 2014 9:05 pm

What value will StarWind deduplication provide compared to a free Windows built-in dedupe?

Not a big fan of DPM, AppAss is <...>. You may try Veeam for VM backups, or Acronis if you need to back up a hybrid infrastructure.

Regards,

Anton Kolomyeytsev

Chief Technology Officer & Chief Architect, StarWind Software

-

Delo123

- Posts: 30

- Joined: Wed Feb 12, 2014 5:15 pm

Wed Feb 26, 2014 10:23 pm

Hi Rob,

I can confirm file servers are good candidates for 2012 dedupe.

2012 R2 is even quicker and uses less CPU.

For "safety", the only thing we changed is the point at which Windows keeps a duplicate copy of a chunk:

"Any chunk that is referenced more than x times (100 by default) will be kept in a second location".

We changed this to 50. It uses more storage, of course, but it made us "feel better".
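To get a feel for what lowering the threshold from 100 to 50 costs, the sketch below counts which chunks cross it and get a second copy. Only the "more than x references" rule comes from the description quoted above; the functions are hypothetical (not part of Windows), and the reference counts and 64KB average chunk size are made-up illustration values.

```python
def redundant_copies(refcounts: list[int], threshold: int) -> int:
    """Chunks referenced more than `threshold` times get a second copy."""
    return sum(1 for refs in refcounts if refs > threshold)


def extra_bytes(refcounts: list[int], threshold: int, avg_chunk_bytes: int = 64 * 1024) -> int:
    """Extra storage spent on second copies (assumes a fixed average chunk size)."""
    return redundant_copies(refcounts, threshold) * avg_chunk_bytes


# Hypothetical chunk store: most chunks unique, a few very popular ones.
sample = [1] * 1000 + [60] * 20 + [150] * 5

for t in (100, 50):
    print(t, redundant_copies(sample, t), extra_bytes(sample, t) // 1024, "KiB")
```

With this sample, the lower threshold protects 25 popular chunks instead of 5. The extra cost depends only on how many chunks have between 51 and 100 references, which is usually a small slice of the store.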

Even so, I think the results are great! (This server is still on 2012, not R2.)

We "saved" over 6TB on a 3TB volume, mostly data warehouse statistical data.

PS C:\Windows\system32> get-dedupstatus

| FreeSpace | SavedSpace | OptimizedFiles | InPolicyFiles | Volume |
|---|---|---|---|---|
| 1.32 TB | 6.28 TB | 77227 | 77227 | G: |

PS C:\Windows\system32> get-dedupstatus |fl

Volume : G:

VolumeId : \\?\Volume{ef3cf420-4597-4dc0-a0c1-8b57192e44ac}\

Capacity : 3 TB

FreeSpace : 1.32 TB

UsedSpace : 1.68 TB

UnoptimizedSize : 7.96 TB

SavedSpace : 6.28 TB

SavingsRate : 78 %

OptimizedFilesCount : 77227

OptimizedFilesSize : 7.94 TB

OptimizedFilesSavingsRate : 79 %

InPolicyFilesCount : 77227

InPolicyFilesSize : 7.94 TB

LastOptimizationTime : 12/28/2013 9:45:12 PM

LastOptimizationResult : 0x00000000

LastOptimizationResultMessage : The operation completed successfully.

LastGarbageCollectionTime : 12/28/2013 2:47:15 AM

LastGarbageCollectionResult : 0x00000000

LastGarbageCollectionResultMessage : The operation completed successfully.

LastScrubbingTime : 12/28/2013 3:46:35 AM

LastScrubbingResult : 0x00000000

LastScrubbingResultMessage : The operation completed successfully.
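The output is internally consistent, which is a quick way to read it: SavedSpace is UnoptimizedSize minus UsedSpace, and the savings rates are SavedSpace divided by the logical size. The sketch re-derives them from the rounded TB values shown, so it can only match to within rounding; the helper name is hypothetical. It gives about 78.9% overall and 79.1% for optimized files against the 78% and 79% reported (which looks like the percentage being truncated rather than rounded, though that is an inference), and a dedupe ratio of roughly 4.7:1.

```python
def dedup_summary(logical_tb: float, used_tb: float) -> tuple[float, float, float]:
    """Return (saved TB, savings rate, dedupe ratio) from logical vs. physical size."""
    saved = logical_tb - used_tb
    return saved, saved / logical_tb, logical_tb / used_tb


# Rounded values copied from the Get-DedupStatus output above.
CAPACITY, FREE, USED = 3.0, 1.32, 1.68
UNOPTIMIZED, OPTIMIZED_FILES_SIZE, SAVED = 7.96, 7.94, 6.28

saved, rate, ratio = dedup_summary(UNOPTIMIZED, USED)
print(f"saved {saved:.2f} TB, savings rate {rate:.1%}, ratio {ratio:.2f}:1")
print(f"optimized-files savings rate {SAVED / OPTIMIZED_FILES_SIZE:.1%}")
print(f"capacity check: {FREE + USED:.2f} TB of {CAPACITY:.0f} TB")
```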

Regards,

Guido

-

anton (staff)

- Site Admin

- Posts: 4010

- Joined: Fri Jun 18, 2004 12:03 am

- Location: British Virgin Islands

Wed Feb 26, 2014 10:34 pm

That's the point. I don't want to waste time doing something better if MS dedupe is GOOD ENOUGH for ice-cold data.

Regards,

Anton Kolomyeytsev

Chief Technology Officer & Chief Architect, StarWind Software

-

fbifido

- Posts: 125

- Joined: Thu Sep 05, 2013 7:33 am

Mon Mar 03, 2014 3:25 pm

anton (staff) wrote: That's the point. I don't want to waste time doing something better if MS dedupe is GOOD ENOUGH for ice-cold data.

I am using vSphere 5.5. Do we enable Windows Server 2012 R2 dedup in the VM, on the storage server that is running the SW iSCSI SAN, or both?

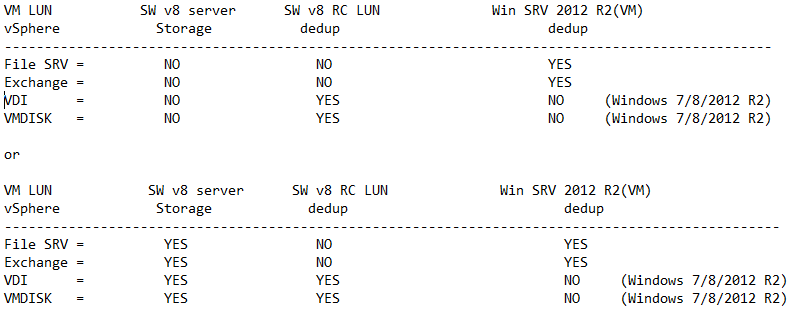

| VM LUN (vSphere) | SW v8 server storage | SW v8 RC LUN dedup | Win SRV 2012 R2 (VM) dedup |
|---|---|---|---|
| File server | NO | NO | YES |
| Exchange | NO | NO | YES |
| VDI | NO | YES | NO (Windows 7/8/2012 R2) |
| VMDISK | NO | YES | NO (Windows 7/8/2012 R2) |

or

| VM LUN (vSphere) | SW v8 server storage | SW v8 RC LUN dedup | Win SRV 2012 R2 (VM) dedup |
|---|---|---|---|
| File server | YES | NO | YES |
| Exchange | YES | NO | YES |
| VDI | YES | YES | NO (Windows 7/8/2012 R2) |
| VMDISK | YES | YES | NO (Windows 7/8/2012 R2) |


-

anton (staff)

- Site Admin

- Posts: 4010

- Joined: Fri Jun 18, 2004 12:03 am

- Location: British Virgin Islands

Mon Mar 03, 2014 9:31 pm

StarWind is not the best choice for your file server, as Windows dedupe handles ice-cold data just fine. StarWind is for primary VM workloads, where MSFT just sucks (not a supported config), VDI (MSFT does play here but is really weak), and SQL Server and Exchange.

Regards,

Anton Kolomyeytsev

Chief Technology Officer & Chief Architect, StarWind Software